Distill4Geo: Streamlined Knowledge Transfer from Contrastive Weight-Sharing Teachers to Independent, Lightweight View Experts

Muhammad Haad Zahid, Murtaza Taj

Abstract:

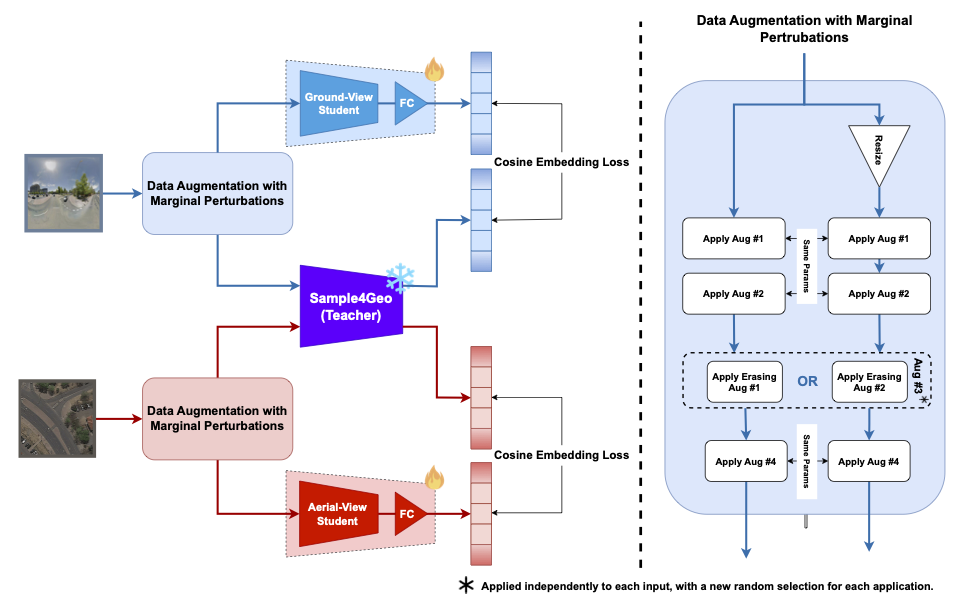

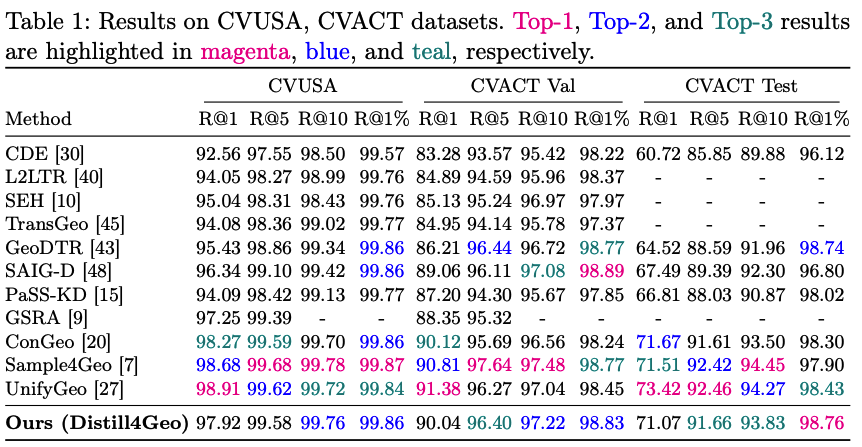

Cross-View Geo-Localization (CVGL) aims to associate images captured from different perspectives (e.g., satellite and street views) with a shared geographic location. The task is challenging because of large viewpoint changes, intricate scene geometry, and visual discrepancies between views. Current methods commonly rely on a contrastive loss, which requires both matching and non-matching (negative) pairs and often needs large batch sizes, leading to significant training overhead. The problem is compounded in weight-sharing models, which typically achieve better accuracy but incur high parameter and computational costs. We introduce a novel knowledge distillation approach that transfers knowledge from a contrastive weight-sharing teacher to lightweight, view-specific student models that do not share weights. Because the students are optimized with a cosine embedding-based dual distillation loss, our method eliminates the need for large batch sizes. We also introduce augmentation noise to improve the student models' pairwise generalization. Our approach reduces the parameter count by 3× and GFLOPs by over 13.5× while achieving state-of-the-art (SOTA) accuracy on leading cross-view datasets, including CVUSA, CVACT, and VIGOR.
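The abstract does not spell out the exact form of the dual distillation loss, so the following is only a minimal sketch under a stated assumption: "dual" is taken to mean one cosine-embedding term per view, each pulling a student's embedding toward the frozen teacher's embedding of the same image. The function names (`cosine_embedding_loss`, `dual_distillation_loss`) are hypothetical and this is not the authors' implementation. It illustrates why such a loss needs no negatives: each sample's term depends only on its own teacher target, so the batch loss is just the mean of per-sample losses and does not depend on batch size or composition.

```python
import math
from typing import Sequence

Vector = Sequence[float]


def cosine_similarity(a: Vector, b: Vector) -> float:
    """Cosine of the angle between two embedding vectors."""
    if len(a) != len(b):
        raise ValueError("embeddings must have the same dimension")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        raise ValueError("cosine similarity is undefined for zero vectors")
    return dot / (norm_a * norm_b)


def cosine_embedding_loss(student: Vector, teacher: Vector) -> float:
    """Positive-pair cosine embedding loss: 1 - cos(student, teacher).

    0 when the student points in the teacher's direction, 2 when opposite.
    """
    return 1.0 - cosine_similarity(student, teacher)


def mean_view_loss(students: Sequence[Vector], teachers: Sequence[Vector]) -> float:
    """Average per-sample distillation loss for one view (hypothetical helper)."""
    if len(students) != len(teachers) or not students:
        raise ValueError("need equal, non-empty batches of student and teacher embeddings")
    return sum(cosine_embedding_loss(s, t) for s, t in zip(students, teachers)) / len(students)


def dual_distillation_loss(
    street_student: Sequence[Vector],
    street_teacher: Sequence[Vector],
    sat_student: Sequence[Vector],
    sat_teacher: Sequence[Vector],
) -> float:
    """Assumed 'dual' loss: one cosine term for the street-view student and
    one for the satellite-view student, each matched to the teacher's
    embedding of the same image. No negative pairs are involved."""
    return mean_view_loss(street_student, street_teacher) + mean_view_loss(sat_student, sat_teacher)


if __name__ == "__main__":
    loss = dual_distillation_loss(
        street_student=[[2.0, 0.0], [0.0, 3.0]],  # same directions as the teacher
        street_teacher=[[1.0, 0.0], [0.0, 1.0]],
        sat_student=[[0.0, 1.0], [1.0, 0.0]],  # first sample is orthogonal to its target
        sat_teacher=[[1.0, 0.0], [1.0, 0.0]],
    )
    print(loss)  # street term 0.0 + satellite term (1.0 + 0.0) / 2
```

In this example the street students already point in their teachers' directions (only the scale differs, which a cosine loss ignores), while one satellite student is orthogonal to its target, giving a total of 0.5. In a real training setup the teacher embeddings would be produced by the frozen weight-sharing teacher and the loss would be minimized with respect to the students' parameters.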

Code: The code is available on GitHub.

PDF: PDF

Poster: PDF

Text Reference:

M. H. Zahid and M. Taj, "Distill4Geo: Streamlined Knowledge Transfer from Contrastive Weight-Sharing Teachers to Independent, Lightweight View Experts," in Proc. of the Int. Conf. on Neural Information Processing (ICONIP), 2025

Bibtex Reference:

@inproceedings{distill4geoICONIP2025,
author={M. H. Zahid and M. Taj},
title={{Distill4Geo}: Streamlined Knowledge Transfer from Contrastive Weight-Sharing Teachers to Independent, Lightweight View Experts},
booktitle={Proc. of the Int. Conf. on Neural Information Processing (ICONIP)},
year={2025}
}